The Evolution of Language and Music

Cognition 100 (2006): 173-215 http://dx.doi.org/10.1016/j.cognition.2005.11.009

The Biology and Evolution of Music: A Comparative Perspective

Fitch, W. Tecumseh.

Studies of the biology of music (as of language) are highly interdisciplinary and demand the integration of diverse strands of evidence. In this paper, I present a comparative perspective on the biology and evolution of music, stressing the value of comparisons both with human language, and with those animal communication systems traditionally termed “song”. A comparison of the “design features” of music with those of language reveals substantial overlap, along with some important differences. Most of these differences appear to stem from semantic, rather than structural, factors, suggesting a shared formal core of music and language. I next review various animal communication systems that appear related to human music, either by analogy (bird and whale “song”) or potential homology (great ape bimanual drumming). A crucial comparative distinction is between learned, complex signals (like language, music and birdsong) and unlearned signals (like laughter, ape calls, or bird calls). While human vocalizations clearly build upon an acoustic and emotional foundation shared with other primates and mammals, vocal learning has evolved independently in our species since our divergence with chimpanzees. The convergent evolution of vocal learning in other species offers a powerful window into psychological and neural constraints influencing the evolution of complex signaling systems (including both song and speech), while ape drumming presents a fascinating potential homology with human instrumental music. I next discuss the archeological data relevant to music evolution, concluding on the basis of prehistoric bone flutes that instrumental music is at least 40,000 years old, and perhaps much older. I end with a brief review of adaptive functions proposed for music, concluding that no one selective force (e.g., sexual selection) is adequate to explaining all aspects of human music.
I suggest that questions about the past function of music are unlikely to be answered definitively and are thus a poor choice as a research focus for biomusicology. In contrast, a comparative approach to music promises rich dividends for our future understanding of the biology and evolution of music.

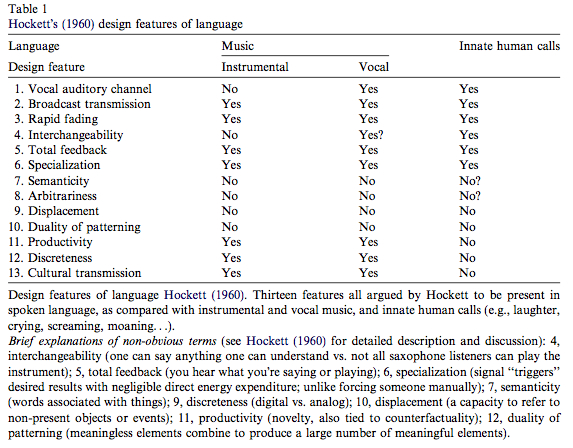

An important paper by a leading expert on the evolution of language and music, W. Tecumseh Fitch. Just like language, music occupies a central place in the human behavioral “package,” which seems to have emerged some 50,000 YBP, according to archaeology. Fitch tackles the intriguing similarities between linguistic and musical structures as forms of communication, as well as remarkable differences between them. He starts off by bringing up Charles Hockett’s “componential analysis” of language and music, according to which language and vocal music share 9 out of 13 traits (see below).

The pattern of shared vs. unique features leads Fitch to conclude that music is like “language without propositional, combinatorial meaning.” (One may add at this point that the use of nonsense words in many tribal musical traditions fits well with this definition.)

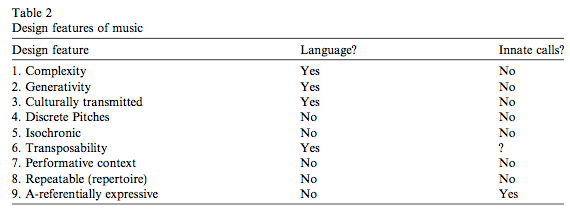

He then proceeds to reverse the terms of the comparison and look at language from the point of view of components present in music.

It’s doubtful that features 7-8 in Table 2 above are true differentiators, unless we a priori exclude all the performative, phatic and dramatic forms of language, such as prayers, greetings, oral literature, rhetoric and poetry, from consideration. Fitch makes this caveat, too. In both tables, in the far-right column, Fitch compares language and music with innate human calls (moaning, laughter, etc.) and shows how divergent language and music are from innate calls and how close, comparatively speaking, they are to each other. He concludes that language is music without a-referential expressiveness, and that music involves discrete time and pitch, whereas language is based on continuous time and pitch. Fundamentally, language and music are sister domains, with “propositional, combinatorial meaning” defining language and discrete time and pitch defining music (in language, pitch and time are continuous, or, one might say, “muted” or “silent”). “A-referential expressiveness,” a design feature of music but not of language, underlies the universal mapping of music onto mood, on the one hand, and onto physical movement (dance), on the other. Again, as Fitch notes, there is an overlap in emotional expressiveness here, because speech has prosodic features, sometimes called the “musical” aspects of language.

At this point, Fitch makes an oversight. He presents music as capable of mapping onto moods and bodily movements, but he matches this capability with nothing on the language side. Yet it is commonplace to see language as able to map onto individual thought, on the one hand, and onto social behavior, on the other. The latter point can be illustrated by kinship terms, which in every culture define social roles and social behaviors along the lines of gender, age, genealogical line, marital status and generation. There is some crossover from language to music here as well: call-and-response and polyphonic, multi-part singing often divide the ensemble into men and women, and musical instruments are sometimes classified as “male” or “female.” But, by and large, language offers a much more intricate means of classifying the social and natural environment and much more concrete means of prescribing social behavior.

Fitch outlines a theory of language evolution that postulates its origin in a musical “protolanguage.” The theory is not new: it was first championed by Darwin, who believed that our ancestors communicated in a song-like manner. This musical protolanguage evolved into modern language, while music survived purely for entertainment. Darwin’s speculation has since been developed by various scholars, and Fitch is in the Musical Protolanguage camp. From the fact that music is known in other species (such as birds and whales), while language is unique to humans, Musical Protolanguage theorists conclude that humans added propositional, combinatorial meaning structures onto a pre-existing musical substrate. On this view, music is presently in free, neutral variation, which explains its rich variety, while language is under positive selection.

Darwin believed that music was originally governed by sexual selection, or, in plain terms, that our ancestors used it for courtship, just as peacocks use their tails, deer their antlers and male birds their songs. Fitch reports that the data are inconclusive. Judging by the fact that, unlike in birds, music in humans is produced by both men and women without much difference, it is unlikely that music was under sexual selection in human ancestors. But he is willing to keep entertaining this hypothesis until solid research either disproves or confirms it.

Fitch favors kin selection to explain both music and language. I’m in complete agreement with him, as this brings the neglected domain of kinship to bear on language and music and makes room for kinship studies to inform the pathways of cultural evolution. Fitch observes that mothers and infants universally use both special musical forms (lullabies) and special language forms (motherese, or baby talk). The early acquisition of both language and music speaks against the sexual selection hypothesis but fits nicely with kin selection. Infants show a preference for song over speech, and singing is universally used to put children to sleep, to strengthen the mother-child bond and to focus children’s attention; hence the role of music in controlling infant arousal and mood is well borne out by the facts. In another paper (Fitch, W. Tecumseh. 2004. “Kin Selection and ‘Mother Tongues’: A Neglected Component in Language Evolution.” In Evolution of Communication Systems: A Comparative Approach, edited by D. K. Oller and U. Griebel, pp. 275-296. Cambridge, Massachusetts: MIT Press), Fitch suggested that the original human language was a “mother tongue” in the sense that it developed for communication between kin (not only between mothers and infants): given the prolonged childhoods characteristic of humans, the benefits of the information to the receiver are so much greater than the cost of language to the signaler that Hamilton’s rule for inclusive fitness is easily satisfied. Finally, the Kin Communication hypothesis makes use of the fact that forms of speech are good identifiers of a speaker’s ethnicity, which may harken back to Pleistocene times, when humans were subdivided into much more granular social groups such as families, moieties and clans. One could always recognize kin by the way they spoke.
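Hamilton’s rule, which the kin-communication argument leans on, can be written compactly. The notation below is the standard textbook form rather than anything taken from Fitch’s paper: r is the coefficient of relatedness between signaler and receiver, B the fitness benefit to the receiver, and C the fitness cost to the signaler. The mother-offspring case is worked through as an illustration.

```latex
% Hamilton's rule (standard form): a costly act can be favored by selection when
r B > C
% Mother and offspring in a diploid, sexually reproducing species: r = 1/2, so
\frac{1}{2} B > C \quad\Longleftrightarrow\quad B > 2C
```

Read this way, a mother’s signal pays off in inclusive-fitness terms whenever the information is worth more than twice what it costs her to produce, a threshold that a long human childhood makes comparatively easy to clear.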

There is a glaring gap in all of Fitch’s research papers on the evolution of music and language. He gives only passing credit to comparative ethnomusicological evidence from modern human populations and uses none of the many studies in linguistic typology. It’s true that ethnomusicology shies away from “music universals” and evolutionary approaches to music. In the 20th century, synchronic approaches dominated both linguistics and ethnomusicology, but linguistics managed to maintain and advance both its phylogenetic and its typological methods. Alan Lomax’s cantometrics stands out as one of the very few contributions to evolutionary ethnomusicology over the last 50 years. As Lomax (Lomax et al. 1968. Folk Song Style and Culture. Washington: American Association for the Advancement of Science, p. 3) described it,

“The cantometric system sets up a behavioral grid upon which all song styles can be ranged and compared. The grid…was designed…to rate [musical performances] on a series of scales (loud to soft, tense to lax, etc.) taxonomically applicable to song performance in all cultures. Thus song can be compared to song, song to speech, and hopefully to other aspects of behavior.”

Clearly, Lomax’s project is right up Fitch’s alley. Essentially, cantometrics is a comprehensive componential analysis of musical styles. Componential analysis came into vogue in the mid-1950s and was applied to kinship terminologies and other semantic domains and even, as we can see, to musical styles. (Hockett’s identification of the design features of language above is also a componential analysis, and it reflects Hockett’s involvement in componential studies of kin-term semantics in the early 1960s.) An example of Lomax’s coding sheets that nicely illustrates his method is shown below:

Lomax clustered human populations into geographic regions showing strong similarities in their musical styles as defined by cantometrics (see below, Fig. 2, from Lomax, Alan. “Factors of Musical Style,” Theory and Practice: Essays Presented to Gene Weltfish, edited by Stanley Diamond. The Hague: Mouton, 1980, p. 39).

He interpreted it as a tree with two roots. One root lies in Siberia/Patagonia, with immediate offshoots into a) Nuclear America and the Circum-Pacific (South America, North America, Australia, Melanesia), and thence into Oceania and Melanesia and into East Africa; and b) Central Asia, and thence into Europe and Asian High Culture. The other root lies in the African hunter-gatherers (Pygmies and Bushmen), with an offshoot in Early Agriculture. The Siberian-Patagonian style and its derivatives are characterized by “male-dominated solos or rough unison choralizing, by free or irregular rhythms and by a steadily increasing information load in various parameters – in glottal, then other ornaments, in long phrases and complex melodic form, in increasingly explicit texts and in complexly organized orchestral accompaniment” (Lomax 1980, 39-40). The Pygmy-Bushmen style and its derivatives are polyphonic, interlocked, more feminized (or at least with a balance of men and women in the ensemble), regular in rhythm, repetitious, melodically brief, cohesive and well-integrated, without ornamentation, with meaningless vocables and frequent yodeling.

The world of traditional music is, therefore, sharply divided along the axes of male vs. female, monophonic vs. polyphonic, rhythmically regular vs. irregular, solo vs. multi-part, and language-friendly vs. meaningless. But the macroareas defined by the Siberian-Patagonian and Pygmy-Bushmen roots overlap, with examples of Pygmy-Bushmen vocalizing popping up sporadically in Lithuania, Georgia, Vietnam, Papua New Guinea and Amazonia. One gets the impression that the Pygmy-Bushmen style is a relic surviving in poorly accessible geographic areas such as the Ethiopian Highlands (Dorze), the Caucasus (Svan), Papua New Guinea and the Amazonian rainforest.

In the book entitled “Who Asked the First Question? The Origins of Human Choral Singing, Intelligence, Language and Speech” (Logos, 2006), the prominent Georgian ethnomusicologist Joseph Jordania expanded on Darwin’s Musical Protolanguage theory by arguing that all hominid species, beginning with Homo erectus, communicated by means of polyphonic, multi-part, interlocked music. In his view, Homo sapiens expanded out of Africa without possessing articulated speech; correspondingly, we see traces of the Pygmy-Bushmen style everywhere around the globe, including such remote areas as Papua New Guinea and South America. After the initial expansion of music-minded Homo sapiens, its regional varieties began developing articulated speech independently of each other, just as, thousands of years later, they would begin developing agriculture in a small number of centers in the Middle East, China, Papua New Guinea and the New World, from which the new economic type spread by diffusion and migration. Jordania (2006, 349) wrote,

“After the advent of articulated speech musical (pitch) language lost its initial survival value, was marginalized and started disappearing. Articulated speech became the main communication medium in human societies. Early human musical abilities started to decline. The ancient tradition of choral singing started disappearing century by century and millennia by millennia. Musical activity, formerly an important part of social activity, also started to decline and became a field for professional activity.”

This is completely in line with Fitch’s thinking, and it also translates into concrete musical traditions, allowing one to think about the evolution of music into speech as a historical process rather than a theoretical abstraction. What uniquely characterizes Jordania’s position is his belief that human populations outside Africa began switching to articulated speech earlier than African (and, to a certain degree, European) populations. Levels of linguistic diversity accurately reflect this process: in areas such as the New World and Papua New Guinea, linguistic diversity is exceptionally high. The Americas count 140-150 linguistic stocks with no demonstrated kinship between them. Africa and Europe, on the other hand, have very low diversity when it is defined as the number of independent stocks: Africa has no more than 20, Europe around 10 (counting Pictish, Etruscan and other extinct languages).

Jordania’s hypothesis carries very specific predictions for human population history. Modern humans must have expanded out of Africa in the Middle Pleistocene, before clear signs of modern human behavior appear in the archaeological record. The discovery of a fully modern chin at Zhirendong (South China), dated to 100,000 BP, agrees with this. Assuming that Upper Paleolithic technologies were enabled by the same symbolic capacity as the newly evolved faculty of speech, the Middle-to-Upper Paleolithic transition must have happened independently in different parts of the world. We do have plenty of evidence for this: in Australia and Southeast Asia, the modern human “behavioral package” is poorly visible until the Holocene, and in South Africa the transition from the Middle Stone Age to the Late Stone Age occurred around 45,000 BP, without any apparent connection to the Middle-to-Upper Paleolithic transition in Europe, which began around the same time. The lack of archaeological indicators of modern human behavior in association with “anatomically modern humans” in Africa supports the notion that they lacked the symbolic capacity that produced language.

So far, Jordania’s model seems workable without coming into conflict with archaeology and paleobiology. But several problems loom large. The New World must have been peopled during the very first Middle Pleistocene wave out of Africa, because the Pygmy-Bushmen style is well attested in South America. Not only is it well attested, it is attested in a unique form, unknown in the Old World, which Victor Grauer calls “canonic-echoic” (see below). If a “mutation” affected not a derived tradition but a “basal clade,” then this mutation must be old. But archaeology presently cannot support such an early entry into the New World. This may not be the biggest objection to Jordania, though, because under my out-of-America model the paucity of artifacts in the New World prior to 15,000 YBP reflects not a lack of human presence but a small population size and a “non-invasive” ecological adaptation. More importantly, and this is where Jordania’s model begins to fall apart, if the New World, the Sahul and parts of Asia, all with elevated levels of linguistic diversity, shifted to language earlier than Africans and Europeans, then why don’t we see a proliferation of definitive signs of modern human behavior in the archaeological record of Australia, Papua New Guinea and the New World after 100,000 BP? Such signs are much more readily found in Africa and Europe than in Australia, Papua New Guinea and the New World. Finally, Jordania’s insight implies that language evolved multiple times within a single hominid species (Homo sapiens sapiens). Fitch’s position, by contrast, is that while music evolved multiple times in different taxa (whales, birds, monkeys, humans), language is uniquely human, hence it must have evolved only once. It’s unlikely that a system as complex as language, equipped with propositional, combinatorial semantics, evolved multiple times in the past 100,000 years but hardly ever before.
But the elegance with which Jordania’s model fits with the Musical Protolanguage theory and explains the vastly different levels of linguistic diversity in Africa vs. the New World keeps me awake.

Victor Grauer, Alan Lomax’s one-time student and research assistant, has recently returned to active ethnomusicological research and decided to convert Lomax’s two-root tree of musical styles into a single-root tree that would reflect the population genetic trees produced in support of the recent out-of-Africa model of human dispersals. He started by rearranging Lomax’s diagram above into a more clearly bifurcating tree and by relabeling Lomax’s musical styles with a mix of descriptive labels (the Siberian-Patagonian root became “breathless solo,” the Australian style “iterative one-beat,” the Amazonian version of the Pygmy-Bushmen style “canonic-echoic,” etc.) and number-letter combinations inspired by the “haplotypes” of population geneticists.

The resulting single-root tree mimicked genetic phylogenies by showing a) the radical divergence of the Pygmy-Bushmen style of vocalizing from the rest of human musical styles; and b) the progressive loss of the rich properties of the Pygmy-Bushmen style (polyphony, open-throated singing, interlock, yodeling, etc.) as a consequence of serial founder effects befalling humans on their journey out of Africa and into the Americas.

Primitive polyphony turned into monophony, multi-part singing into solo and unison, and open throats yielded to breathless, constricted and coarse vocalizing. At the same time, new properties emerged (from Jordania’s point of view, under the influence of growing and positively selected speech) where previously there were none: for example, meaningless vocables turned into long phrases. As with Jordania, Grauer’s model makes sense but lacks probative power. On closer examination, it raises doubts. First, the fit between genetic trees and Grauer’s musical tree is far from perfect: in genetic trees, Pygmies and Bushmen don’t share a clade. Instead, as in Y-DNA, Pygmies fall into the same clade (BT) as the rest of humans, while Bushmen are outliers. If genes and music mapped well onto each other, we would expect Pygmies to share more cantometric properties with the rest of humans than with Bushmen. But Grauer (“Concept, Style, and Structure in the Music of the African Pygmies and Bushmen: A Study in Cross-Cultural Analysis,” Ethnomusicology 53 (3), 2009) insists that Pygmies and Bushmen belong to the same musical tradition. Second, in most genetic trees, American Indians occupy downstream clades, and it’s widely believed that the New World was colonized much later than other continents. But on Grauer’s tree, American Indians are represented in all clades (from shouted hocket among the Hupa to breathless solo in Patagonia to iterative one-beat in North America). Third, the Pygmy-Bushmen style has clear parallels in Papua New Guinea and South America, but genetic trees do not document any haplotype sharing between Sub-Saharan Africa, Papua New Guinea and Amazonia or the Andes.
Finally, since the publication of Grauer’s tree of musical styles, new genetic evidence (Denisovan and Neandertal admixture in modern humans) has falsified the recent out-of-Africa model with serial founder effects, leaving Grauer without the solid foundation in “hard data” that his theory once enjoyed.

Genetics aside, there is nothing in the ethnomusicological data per se that necessitates an out-of-Africa reading of the distribution of modern human musical traditions. First, the derived nature of monophony cannot be clearly documented. Australia is completely monophonic, without any trace of polyphony. The fact that the Pygmy-Bushmen style tends to survive in isolated pockets and geographic refugia does not mean that more widespread styles are all derived from it. It only suggests that the Pygmy-Bushmen style may have been more prominent in the past, just as “breathless solo” or “iterative one-beat,” also confined to relic areas, could have been. Second, although both Jordania and Grauer claim to have walked away from the old-fashioned model of musical evolution from simple to complex, subordinating the whole of human musical history to a single trend, the disintegration of a bundle of properties attested in African foragers, sounds like a revival of evolutionism in its most primitive form. Third, if, as Fitch argues, music and language share the same kin-communication base, then monophony cannot be a derived trait, because lullabies (presumably primordial among humans) are necessarily monophonic. Hence monophony and polyphony can both be ancestral, since they are confined to different domains of human life. Fourth, many archaic monophonic traditions (such as the “iterative one-beat” found in Australia and North America, or “breathless solo,” the other root in Lomax’s model) are tightly linked to drumming, and drumming is an ancestral trait in humans, homologous with drumming in chimpanzees (Fitch 2006, 194-195). At the same time, polyphonic singing is often associated with complex musical instruments such as panpipes (again, attested from Sub-Saharan Africa to the Andes). Archaeologically, the earliest attested instruments (flutes dated to 36,000 years ago in Europe) are, naturally, simple.
This may indicate that both vocal and instrumental polyphony have undergone considerable progressive evolution since the Late Pleistocene. Fifth, it’s unclear whether monophony and polyphony are as starkly differentiated as Grauer often portrays them. For instance, meaningless vocables are widespread in North America (some tribes were even known to outsiders by their most popular nonsense words), yet solo and unison vocalizing dominate there. The loose and flexible structure of Amazonian “canonic-echoic” (from Grauer’s A clade) echoes the “free or irregular rhythms” of “breathless solo” (Grauer’s B clade). In fact, Grauer acknowledges a crossover between the two divergent musical styles, taking place not in Africa but in the Circumpolar region.

“While B2 seems in many ways almost the opposite of any of the African A styles, there are some very interesting points in common, as indicated by the scored lines linking B2 with A2. The two styles are both characterized by yodel or yodel-like vocalizing (especially in the “joik” songs of Lapland, but also reflected in the heavy glottalization found throughout this style area), continuous vocalizing (interrupted by gasps for breath), wide intervals, and an emphasis on “nonsense” vocables.”

Sixth, a change from the looser, more flexible structure of “canonic-echoic” toward the more regimented Pygmy-Bushmen style seems more natural than a change in the opposite direction. This is consistent with the fact that the Hadza, an African isolate with a population structure strikingly different from anything else known in Africa but similar to that found in the New World and Papua New Guinea, vocalize in a loosely coordinated fashion. (Grauer uses the same logic to argue that Pygmy kinship systems are more archaic than Bantu ones.)

The key issue the Musical Protolanguage theory needs to solve is the origin of propositional, combinatorial meaning, which is exactly what music lacks. The solution the theory proposes is a clever one. Instead of imagining the evolution of language as a change from simple words to complex grammatical structures, one can reverse the logic and attribute to music’s a-referential, emotional expressiveness a richer meaning than the referential meaning found in language. Fitch notes that this idea goes back to the famous Danish linguist Otto Jespersen, who argued that human language evolved from a musical communication system in which whole propositional meanings were attached to entire musical phrases. The components of these sound strings were not stitched together according to particular rules, and there were no “words” in our sense of the word. The listener comprehended these phrases as coherent wholes without analyzing them into constituents. The revolutionary cognitive shift that separated language from music involved analyzing these synthetic phrases into constituent parts (syllables and words) and attaching specific semantic meanings to the individualized formal elements.

“What in the later stages of languages is analyzed or dissolved, in the earlier stages was unanalyzable or indissoluble; ‘entangled’ or ‘complicated’ would therefore be better renderings of the first state of things” (Jespersen, Otto. Language: Its Nature, Development and Origin. London, 1922).

Cognitive linguist Alison Wray (Wray, Alison. 2000. “Holistic Utterances in Protolanguage: The Link from Primates to Humans”. In The Evolutionary Emergence of Language: Social function and the origins of linguistic form, edited by C. Knight, M. Studdert-Kennedy, and J. R. Hurford. Pp. 285-302. Cambridge: Cambridge University Press.) rediscovered Jespersen’s insight and adduced additional evidence from child acquisition, adult speech and neuroscience in its support.

Jespersen’s approach to language evolution parallels Jordania’s and Grauer’s approach to musical evolution: all these scholars reverse the simple-to-complex mantra of classical evolutionist thought. But by applying the same logic to language and to music, they end up finding the relics of their postulated ancestral condition in two opposite corners of the world. According to Jespersen, among modern languages, polysynthetic or incorporating languages employ a holistic approach to meaning most consistently. The 19th-century linguist Peter S. Duponceau (Report of the Corresponding Secretary. Read 12th January, 1819. Transactions of the Historical and Literary Committee of the American Philosophical Society I, 1819), who coined the term “polysynthetic,” described them as

“a mode of compounding locutions which is not confined to joining two words together, … but by interweaving together the most significant sounds or syllables of each simple word, so as to form a compound that will awaken in the mind at once all the ideas singly expressed by the words from which they are taken.”

Polysynthetic languages are ubiquitous in the New World and rare in the Old World, where they are found mostly in Papua New Guinea and the Caucasus, two regions that, like the New World, are characterized by extensive linguistic diversity. So, while Grauer describes most of the New World as dominated by solo and unison musical traditions, which are significantly removed from the presumably original Pygmy-Bushmen style (“canonic-echoic” being a rare exception), linguistically American Indians seem to be the closest to the musical stage in the evolution of human communication. All this assumes that the Musical Protolanguage theory is correct.

This is all very interesting, both the article itself (as far as I can tell from your review) and what you’ve written about the topic generally. Unfortunately the article is behind a paywall and I just can’t afford to pay $30 or more for everything that interests me.

Language and music certainly share a great deal, though Fitch seems to leave out the most striking connection, from a semiotic viewpoint, i.e. the presence in both of second articulation. And from a Saussurean point of view, the importance of value. Does he mention either in the paper?

The notion of a musical protolanguage was also put forward by my colleague Steven Brown (co-editor and author of “The Origin of Music”). He calls it “musilanguage” and I discuss this in my book. I also discuss the relation of music to Darwinian adaptation in my other blog, starting here: http://music000001.blogspot.com/2010/08/332-did-music-originate-as-behavioral.html

Your comment regarding the “glaring gap” in Fitch’s research is very much welcome. As I see it comparative musicological studies of world music are essential to this type of research yet this aspect is almost always ignored, as though Western music were representative of music at all times and places.

I’m also pleased to see a discussion of Cantometrics and also of my own work, which was of course heavily influenced by Lomax and his ideas.

I don’t have time or space to do justice to your complex analysis or to respond fully to some of the problems I have with some things you’ve written. But I’d like to say a few words regarding your comments on Jordania’s ideas.

I tend to reject the notion that language could have resulted from multiregional convergence, as he believes, and possibly you as well (?). But you make a point I hadn’t considered before. If archaic humans were already vocalizing musically prior to the earliest Out of Africa migration, the one that involved Homo erectus among others, then perhaps all the essential ingredients of speech were already present as they dispersed to Asia and Europe.

So the step from musical vocalizing to articulated speech might not have been such a huge leap after all, suggesting that if not “musi-language” then at least articulated speech might have been independently invented in the various regions.

I’m still skeptical. But a bit less so than before, so thank you.

I’d be cautious, however, in comparing the independent invention of agriculture to the possible independent invention of language/speech. There’s no question agriculture was invented separately in different parts of the world at different times. But it is by no means a universal, as is language. The idea that language, or if you prefer, articulated speech, could have developed independently in so many different places due to some innate force of convergence is very close to the sort of teleological thinking that’s been thankfully left behind by most evolutionists.

Victor, I e-mailed you the Fitch paper. I hope I still have your valid e-mail address.

Regarding Jordania, I left the post a bit open-ended without imposing my own perspective too much. I can’t accept his thesis that language emerged multiple times in human history, and I argue against it using Fitch’s logic that language is more specific and unique than music, hence it must have evolved only once in humans. At the same time, his solution to the puzzle of low linguistic diversity in Africa and Europe and high linguistic diversity in the New World, PNG and parts of Asia is what one is forced to consider if one wants to stay with out-of-Africa thinking and not simply close one’s eyes to the linguistic situation. However, neither Jordania’s solution nor the outright dismissal of the linguistic situation as epiphenomenal is satisfactory, hence one is left with strong doubts regarding an out-of-Africa scenario.

If we change the time scale dramatically and start with Homo erectus times (again, some researchers have argued that Homo erectus may have migrated into Africa rather than the other way around), we do apparently get the fundamental building blocks of human cognition and sociality at that time, but then a question looms large: why didn’t tool evolution take off much earlier than the Upper Paleolithic? This question doesn’t arise if language is conceived of as having evolved once and recently (around 50,000 years ago), because then lithic improvements are logically related to the new symbolic capacity.

Not to mention the fact that we would still need a much longer human presence in the Americas to account for their linguistic diversity.

Thanks so much for sending the pdf, German. I’m reading it now. If you email me with your address I’ll be happy to order a hard copy of my book for you.

As far as the music-language relation is concerned, I see that Fitch does not in fact seem to appreciate the importance of either second articulation or value as links joining the two quite strongly. Second articulation, which in speech produces phonemic organization, is an essential aspect of music, providing us with both “tonemes,” the tonal values (i.e., notes), and “rhythmemes,” the rhythmic values, such as quarter notes, half notes, etc.

Thanks to second articulation, a small number of fundamental elements can produce a wide range of different expressions or communications, suggesting that second articulation must have preceded first articulation (the articulation of morphemes), which in turn provides us with a strong argument for music’s historical priority over speech. So the question is: how much of a leap is it from the development of second articulation in music to the combination of such elements to produce the morphemes of speech?

And if things developed this way, does it make sense to assume that tone languages would have preceded non-tone languages? If that were the case, then that would be a very strong argument for the origin of language in Africa, where tone languages are far more common than anywhere else?

In some Bantu languages, for example, it’s possible to communicate a great deal simply by whistling, or via the “talking” drum. Could we consider this type of communication a kind of “missing link” between music and speech?

Also, as I pointed out on my blog, tuned pipes, which, as we know from archaeological research as well as logical inference, are among the oldest musical instruments, already function symbolically, as a kind of musical notation, since each pipe “stands for” a unique tone and the ensemble of such pipes represents a scale. One could in principle denote a melody simply by pointing from one pipe to the next.

“does it make sense to assume that tone languages would have preceded non-tone languages? If that were the case, then that would be a very strong argument for the origin of language in Africa, where tone languages are far more common than anywhere else?”

As far as I can see, there are two ways into “language” from “music”: one via “tone languages,” the other via “polysynthetic languages.” Geographically, they seem to be in complementary distribution. Polysynthetic languages (PL) are found in the New World, Paleosiberia, PNG, Australia, the Caucasus, and Basque, but not in Africa or mainstream Eurasia. Tone languages are pervasive in Africa and Southeast Asia and are found only sporadically in the Americas (Oto-Manguean) and Papua New Guinea.

I haven’t seen anybody else argue for an inherent incompatibility between tonal and polysynthetic languages, so this is just my own observation, but the fact that in Southeast Asia all tonal languages are grammatically isolating (isolating languages are at the opposite extreme from PL) makes me think there’s something to it. It must be hard to assign a tone to an individual syllable or word if they are all tightly integrated into an unwieldy sentence-like construction.

There are a couple of things linguists have established that are relevant to our concerns here:

1. Tonal languages in Africa and Southeast Asia are very different from each other. They are not the same type. There are languages in SEA that display “African” tones but there are no languages in Africa with “SEA” tones.

2. Tonogenesis is common, tonoexodus is rare. There are many documented cases of tonogenesis outside of Africa. There are no known drivers of tonogenesis in Africa.

This suggests to me that it’s unlikely that the “first” human language was tonal and that tones were then lost from many languages. The opposite is more likely: tones can arise in a language at any time as a compensatory prosodic mechanism that kicks in after the loss of a consonant. Sometimes tones remain few in number and may be lost; other times they develop into a complex system. Outside of Africa, languages have been going back and forth on tones for a long time, while one or two of those tonal “experiments” arrived in Africa and became “fixed” there. Polysynthetic languages, by contrast, are quite unique: there are many examples of polysynthesis being lost, as in the Eskimo-Aleut or Aztecan language families, but very few, if any, examples of polysynthesis evolving in, say, agglutinative or flective languages.

Tonal languages are very frequent; most languages have tones in some form. If tone were a residual feature, we would see it in the world’s languages as a curiosity rather than as a system. If I’m right that polysynthesis places a constraint on tones, then the relaxation of that constraint would have created an opening for tones to evolve. As languages shifted further toward the analytic pole (the isolating languages of SEA, as well as African languages, are analytic), tones evolved into very complex systems.

“second articulation must have preceded first articulation”

Although Fitch doesn’t touch on second and first articulation, his (and others’) belief that language evolved from holistic units of meaning to discrete units of meaning makes me think that he would instead prioritize first articulation over second articulation. I suppose second articulation in language and in music can be seen as parallel but independent ways of structuring the holistic messages that musical protolanguage operated with.

“In some Bantu languages, for example, it’s possible to communicate a great deal simply by whistling, or via the “talking” drum. Could we consider this type of communication a kind of “missing link” between music and speech?”

I’m interested in whistled languages, too (http://anthropogenesis.kinshipstudies.org/2012/03/piraha-indians-recursion-phonemic-inventory-size-and-the-evolutionary-significance-of-simplicity-4/). Languages with very limited phonemic inventories (such as Piraha in South America) have a system of whistled sounds. For me, whistled sounds are on the same test bench as click sounds. Khoisan languages have fully integrated clicks into their phonemic inventories, while in most other languages clicks are paralinguistic. I speculated on your blog (http://music000001.blogspot.com/2009/12/264-baseline-scenarios-40-gap.html) that phonemic clicks share an essential process with throat singing: ingression. If the ancestors of the Khoisan had throat singing similar to the Siberian kind, they may have noticed that the same breathing process can generate linguistic sounds. Later, throat singing fell out of favor, but clicks survived and prospered.

Music and language have been coevolving for millennia. It would be strange not to see them influence each other.

“each pipe “stands for” a unique tone and the ensemble of such pipes represents a scale. One could in principle denote a melody simply by pointing from one pipe to the next.”

I think you just nailed the earliest documented instance of writing!

Thanks, German, for this very thorough and interesting response. From what you say, it looks like there are two ways of thinking about parallels between music and language: 1. the presence in both of second articulation, which emphasizes the smallest units; 2. the parallel between the continuous musical phrase and what Fitch apparently regards as the earliest utterances of speech, which as you suggest would tend to be polysynthetic. Definitely food for thought.

As far as tone languages are concerned, my problem is that I have looked and looked in the literature and to this day have never found an instance where a logical argument for the priority of non-tonal languages is presented. This seems simply to be taken for granted, which makes it sound suspiciously ethnocentric, i.e., based on the notion that European languages represent the norm.

Additionally, I must say I find it difficult to accept studies that supposedly demonstrate tonogenesis, since once again non-tonality is always assumed to be the norm and the inferred process is thus based on an assumption rather than a critical analysis. I also wonder how it is possible to determine whether a particular language was “originally” tonal or non-tonal thousands of years ago.

Much in this type of linguistics strikes me as overly technical, based on arcane terminology accumulated over many years, and I wonder how much of it has been subject to serious critical review. Too often I see sentence after sentence built on taken-for-granted terms and concepts accepted at face value rather than systematically argued.

It’s almost as though they are creating a barrier between themselves and anyone outside their field, and I wonder why. If you can point me to a book or paper on tonogenesis that presents a coherent argument designed for non-specialists I’d appreciate it.

Victor,

I did some additional digging around in linguistic literature to get a better answer to your questions. Here’s what I can say.

1. The Eurocentric bias is something that needs to be taken into account. The exceptional importance of tones in African languages is something that wasn’t fully recognized until some 30 years ago, and even 15 years ago I witnessed an Africanist ethnolinguist denying the phonological significance of tones in West African languages in a discussion with descriptive linguists.

2. I haven’t seen this bias against tones affecting studies of tonogenesis in non-African languages. (As I wrote earlier, there are no known cases of tonogenesis in Africa.) There are simply very clear and unambiguous cases of tonogenesis in non-African languages, and they are interpreted as tonogenesis because of the structure of the languages in question, not because of a Eurocentric bias. Linguists conclude that tones are secondary in some of these language families because of very transparent connections between consonant loss and compensatory tone formation in individual languages. For instance, among the Athabascan languages only two, Chipewyan and Gwichin, have tones. All the others (including most of the Northern Athabascan languages, the group to which Chipewyan and Gwichin belong) have traces of what is known as suprasegmental constriction, a mild glottalization that sounds like creaky voice. Back in the day, before detailed descriptions of Athabascan languages became available, linguists played with the idea that Chipewyan and Gwichin preserved the original proto-Athabascan tones. But then several linguists proved the opposite. A detailed discussion can be found at http://books.google.com/books?id=X7ZBprpCbZEC&pg=PA143&lpg=PA143&dq=chipewyan+gwichin+tones&source=bl&ots=ElJ6h9vSwM&sig=oSWzBecLSB0sYHQQx4TyMzrTZo8&hl=en#v=onepage&q=chipewyan%20gwichin%20tones&f=false

A couple of highlights. Glottalized consonants are very common across all of the Athabascan range and are securely reconstructed for proto-Athabascan. A glottalized consonant outside of Chipewyan and Gwichin corresponds to two different tones in these languages – a low one in Gwichin and a high one in Chipewyan. So, there’s no underlying commonality between Chipewyan and Gwichin, which could be interpreted as retention from an ancestral state. Rather, tones evolved in the two languages independently from a common base in glottalized consonants and the resulting suprasegmental constriction.

BTW, the Athabascan case of tonogenesis reminded me of Nettl’s argument that in North America there are isolated cases of drone polyphony in a sea of monophonic traditions; he interpreted this distributional evidence as pointing to North American drones as “incipient polyphony,” rather than as retentions of contrapuntal polyphony.

3. The Athabascan example shows that linguists established the instability of tones in languages outside of Africa using the comparative method and proto-language reconstructions. (Another good case study is tonogenesis in Vietnamese.) This means the finding is as secure as it gets, but only for processes that took place in the past 5,000-10,000 years. Linguists don’t have a reliable methodology to tell us anything about tonogenesis before that, or in hypothetical megaphyla such as Dene-Caucasian or Nostratic. The only thing they are certain about is that outside of Africa tones are neither stable nor conservative. They are found in widely separated language families and in individual languages within a family, suggesting that they must have been the products of multiple independent developments in the past 5,000-10,000 years. They are compensatory mechanisms that originally exist as unintended side effects of laryngeal and glottalic articulations, but then step in to fill the gaps and expand into systems of five to seven tones once those laryngeal and glottalized consonants get washed out of the system.

4. Unfortunately, I haven’t found a paper or a book that would deal with tonogenesis from the macrodiachronic perspective that you and I are working with. Neither did I encounter a paper that deals with tonogenesis in a very simple way. Childs has a useful discussion at http://books.google.com/books?id=2wMX6tJQFBMC&pg=PA86&lpg=PA86&dq=language+evolution+tonogenesis&source=bl&ots=CMx1z1MlJE&sig=K0to1ZvTd6Tzzl-MkVuQqFcQM5A&hl=en#v=onepage&q=language%20evolution%20tonogenesis&f=false.

5. So, it’s possible that we’re dealing here with two different tonal phenomena. They are look-alikes, with no historical continuity between them. It’s possible that African languages retained tones from the musical protolanguage. But tones outside of Africa are not decaying African tones; they are independent innovations arising in thoroughly non-tonal languages. Let’s call this the compensatory tone phenomenon. It’s possible that the African musical tone phenomenon got lost everywhere outside of Africa as people migrated out more than 10,000 years ago, and linguistics can’t say categorically that this did not happen. What it can say is that, outside of Africa, attested tonal systems tend to be derived from non-tonal ones.

These empirical findings could also be applied to African tones. The only difference is that African tones must have emerged from a non-tonal situation more than 10,000 years ago, because there are no traces of tonogenesis in the African language families usually assigned to the 5,000-year time horizon. This would mean that the similarities between African tones and musical tones stem not from common descent but from independent innovation, or from the influence of musical tones on linguistic tones.

But these are informed speculations and linguistics has little to offer to prove or disprove them with the degree of certainty that you and I look for.

Victor,

There does seem to be some correlation between complex tones and isolating morphology (at least in Asia), as you can see from the deck “Morphological Typology” at http://www.sfs.uni-tuebingen.de/~gjaeger/lehre/ws1011/languages_of_the_world.html. But it could also be an “accidental areal phenomenon,” so the connection has been noted but not investigated in depth.

Hi German, a thousand thank-yous for this article. I am trying to find a master’s program that would allow me to study music from a comparative approach, in the same way it was approached in this article. The origins of music, music mapping, etc. interest me very much. Would you happen to know of an institution or a professor teaching such topics? Thank you all so much for your kind help.

If you’re looking for professionals in the U.S., you should write to Victor Grauer. He’ll point you further. Just leave him a comment at http://music000001.blogspot.com/. Outside the U.S., I recommend getting in touch with Joseph Jordania, who teaches in Australia.